Institutions and the Web done better

Introduction - (warning - old-timer indulgence)

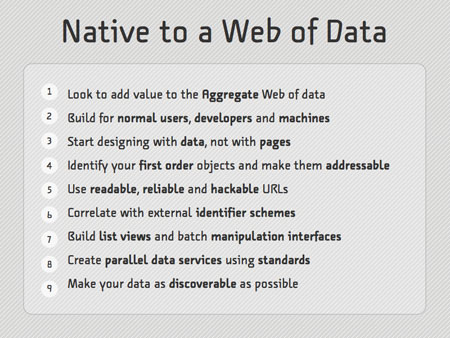

From the mid-nineties through to the end of 2006 I earned my living as a developer of Web applications, or as someone managing Web application development projects. I like to think I was quite good at it, and I certainly have a lot of experience. I worked with CGI, writing in Perl and a little C, moved into ColdFusion and Java (via JServ - anyone remember that?), did the whole Java EE thing, undid it again, did SOAP because it was better than J2EE, and undid that when we realised it actually wasn't... In about 2002/3 I adopted a RESTful approach to building Web-based intranet applications - and some of those applications are, I believe, still being used. The idea that Web applications should be designed so that the functions flow around the resources being manipulated, rather than the resources being moved about to enrich the functions, made absolute sense to me. I have not deviated from this general approach since then. In 2006, just before I joined UKOLN, I came across Tom Coates's 'Native to a Web of Data' presentation.

A Web of data

One slide in Tom's presentation really appealed:

Very recently I had cause to revisit this, and I began to wonder how it stacked up against current thinking. Over the last couple of years there has been a push to get Linked Data accepted by the mainstream, and there have been arguments over the extent to which this does, or does not, represent a tactic for advancing a Semantic Web agenda. I remain very skeptical about the likelihood of us realising a 'semantic' Web through the application of ever more structure, metadata, ontologies etc., and the aspiration toward a 'giant global graph' of data interests me little. However, even leading figures in the Semantic Web can be pragmatic: Tim Berners-Lee's '5 Stars of Open Linked Data', as reported by Ed Summers, are somewhat less ambitious than the nine instructions of Tom Coates's 'Native to a Web of Data'. I'm also jaded by the notion of the '(Semantic) Web Done Right'. The 'Web done right' is... the Web we have. That's the beauty of the Web: it has worked where many distributed information systems have not, by taking a 'good enough' implementation of a really good idea and running with it, at a massive scale. But, as ever, there is room for improvement - we can, and certainly should, aspire to a Web 'done better'.

From documents to data

The Web to date has been largely oriented towards humans manipulating documents through the use of simple desktop tools. Until relatively recently, this was mostly a read-only experience. However, it has been clear for some time that, when content is made available in some sort of machine-readable form, it lends itself to being re-used, especially through being combined with other machine-readable content. This echoes the experience of the document-oriented Web, where it soon became apparent that there was much value to be added by bringing documents together through linking. The data-oriented Web takes this a step further: the linking is still very important, but with machine-readable content in the form of data, the possibility exists to process the content remotely, after it has been published, to merge/change/enhance/annotate/re-format it. Recent years have shown how the Web can function as a platform for building distributed systems through the rise of the 'mashup' as an approach to building simple point-to-point services.
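
To make the idea of re-processing published data concrete, here is a minimal Python sketch of a 'mashup'-style merge. It assumes two hypothetical datasets, already published as JSON by different parties: one describing rooms, the other describing equipment, with both referring to rooms by the same URL. The URLs (on the reserved `.example` domain), field names and values are all invented for illustration.

```python
import json

# Hypothetical dataset published by an institution: rooms, each identified by a URL.
ROOMS_JSON = """
[
  {"url": "https://www.university.example/room/b101", "name": "B1.01", "capacity": 40},
  {"url": "https://www.university.example/room/b102", "name": "B1.02", "capacity": 120}
]
"""

# Hypothetical dataset published separately, describing equipment
# and referring to rooms by the same URLs.
EQUIPMENT_JSON = """
[
  {"room": "https://www.university.example/room/b102", "item": "data projector"},
  {"room": "https://www.university.example/room/b102", "item": "hearing loop"},
  {"room": "https://www.university.example/room/b101", "item": "whiteboard"},
  {"room": "https://www.university.example/room/z999", "item": "piano"}
]
"""


def merge_by_url(rooms, equipment):
    """Enrich each room record with the equipment items that refer to its URL."""
    # The shared URL is the join key; without it the merge would be guesswork.
    merged = {room["url"]: {**room, "equipment": []} for room in rooms}
    for record in equipment:
        room = merged.get(record["room"])
        if room is not None:  # skip equipment for rooms absent from the first dataset
            room["equipment"].append(record["item"])
    return merged


combined = merge_by_url(json.loads(ROOMS_JSON), json.loads(EQUIPMENT_JSON))
for room in combined.values():
    print(room["name"], room["capacity"], ", ".join(room["equipment"]))
```

The two publishers never coordinate beyond using the same identifiers for the same rooms, which is what lets a third party combine their data after publication.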

The institutional context

So, what does this mean for the Higher Education Institution (HEI)? The HEI tends to already have a large amount of Web content. An HEI of any size will also maintain significant databases of structured information. In more recent years, HEIs have adopted content management systems (CMS) of one sort or another to manage loosely structured content. In some cases, such CMS systems are also used to expose structured information from back-end databases. It is still rare, however, for a typical institutional Web Team, using a standard CMS, to pay much attention to the sort of instructions on Tom Coates's slide. HEI Web Teams tend to work in terms of 'information architectures', which often primarily follow organisational structures. Their tools, their processes and the expectations of senior management make this the sensible approach. However, this tends to mean that, periodically, the institutional Web site will be re-arranged to re-align with organisational changes. This approach to building an institutional Web site is driven by the imperatives of the document-centric Web: it is about trying to turn a large set of often very disparate documents into a coherent, manageable and navigable whole. The data-oriented Web demands a different approach. The following is a set of pointers to the shift in emphasis needed to allow HEIs to participate in the Web of data. These pointers are heavily influenced by Tom Coates's instructions, but I have condensed and rearranged them and tried to put them into the context of the needs of an HEI.

How HEIs can engage with a Web of data

1. Recognise the potential value of the Aggregate Web of data and invest/engage accordingly

The cost of making data available on the Web is falling steadily, as technology and skills improve and the fixed costs of infrastructure are reduced. Making useful data available on the Web does carry a cost, but it also brings potential benefits. On a simple cost/benefit analysis, it is becoming apparent that we will soon need to justify not making data available on the Web. The 'loss-leader' approach, of making data available speculatively in the hope that someone else will find a use for it to mutual advantage, becomes viable as the costs of doing so become vanishingly small. A lesson from open-source software, where exposing source code to 'many eyes' has been shown to help identify and rectify mistakes or 'bugs', applies to data too. As a general principle, exposing data which can be combined with data from elsewhere is a path to new partnerships and collaborative opportunities. 'Useful data' can range from the sorts of research outputs or teaching materials which might already be on the Web, to structured contact details for academics in an institution, to data about rooms, equipment and their availability. As an example, some institutions already exploit one of their assets, meeting and teaching spaces, by renting them out to external users, especially during holiday periods. Making data about these assets openly available, in a rich and structured way, opens up possibilities for others to exploit these assets better, and for the HEI to share in the benefits. In addition, we are witnessing a wholesale cultural shift in the public sector towards opening up publicly-funded information and data to the public which paid for it to be produced. The political momentum behind this cannot be ignored and, while it currently focusses on central government departments, this focus will inevitably widen to include HEIs.

2. Start designing with data, as well as with pages

The typical CMS is geared towards building Web pages. All modern CMS systems allow content to be managed in 'chunks' smaller than a whole page, so that content such as common headers and sidebars can be re-used across many pages. Nonetheless, the average CMS is ultimately designed to produce Web resources which we would recognise as 'pages'. An HEI's Web team will continue to be concerned with the site in terms of pages for human consumption. However, simply by exposing those smaller chunks of information in machine-readable ways, the CMS can become a platform for engaging with the Web of data. My colleague Thom Bunting describes such possibilities, based on his experiments with one popular CMS, Drupal, in 'Consuming and producing linked data in a content management system'.
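
A minimal sketch of the idea, assuming a hypothetical 'staff contact' chunk held as a plain Python dict: the same chunk is rendered into an HTML fragment for the page and also exposed as JSON for software. Real CMSs such as Drupal have their own mechanisms for this; the functions and field names below are illustrative only and are not any CMS's API.

```python
import html
import json

# A hypothetical CMS 'chunk': structured contact details for one member of staff.
contact_chunk = {
    "name": "Dr A. N. Other",
    "department": "Computer Science",
    "email": "a.n.other@university.example",
}


def render_html(chunk):
    """Render the chunk as an HTML definition list for a human-facing page."""
    # Escape keys and values so that stored text cannot break the markup.
    rows = "".join(
        f"<dt>{html.escape(key)}</dt><dd>{html.escape(value)}</dd>"
        for key, value in chunk.items()
    )
    return f'<dl class="contact">{rows}</dl>'


def render_json(chunk):
    """Expose exactly the same chunk in a machine-readable form."""
    return json.dumps(chunk, indent=2, sort_keys=True)


print(render_html(contact_chunk))
print(render_json(contact_chunk))
```

Because both outputs come from one stored chunk, the page and the data cannot drift apart; the machine-readable form costs little beyond the extra renderer.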

3. Develop websites for end-users, developers and software processes

This is a very important principle, and one which is frequently overlooked. Sites which are designed to allow humans to navigate pages are not necessarily accessible to software which might be able to re-process the information in new and useful ways. Widely adopted standard re-presentations of content, such as RSS feeds, have gone some way to mitigating this. But the principle of designing for these different types of user up-front is not yet widely accepted. Developers, especially, are not yet generally regarded as important users - yet for the Web of data to deliver value to data publishers, we need developers to build new services which exploit that data. If you make your data available for re-use, it makes absolute sense to consider the needs of the developers you hope will exploit it. A perceived problem with this is cost: developing websites for different classes of user in this way seems expensive. After all, the HEI's Web team will already be considering several different sub-classes of human user (students, staff, prospective students, alumni etc.). Human end-users will continue to be the priority audience. However, there are strategies for developing websites in such a way that developers and software are not 'disenfranchised'. If a common 'anti-pattern' is to be avoided, an approach which marries these concerns from the beginning is preferable to bolting on extra interfaces (APIs) for developers and systems afterwards. The meteoric rise in popularity of Twitter is in no small part due to its developer-friendly website. Twitter has a simple Application Programming Interface (API) which allows developers to build client applications which use the Twitter service but add value for end-users in some way. A graph at ReadWriteWeb shows that applications built by third-party developers account for more than half of Twitter's usage.
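
One way of serving humans, developers and software from the same URL, rather than bolting on a separate API, is HTTP content negotiation: the client's `Accept` header decides whether it receives HTML or JSON. The sketch below uses only Python's standard-library `http.server`. The course data and the `/course/<code>` path are hypothetical, and the `Accept` handling is deliberately simplistic (it ignores q-values).

```python
import html
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit

# Hypothetical data for course resources, keyed by course code.
COURSES = {"cs101": {"code": "cs101", "title": "Introduction to Programming"}}


def choose_representation(accept_header):
    """Pick 'json' or 'html' from an Accept header (simplified: ignores q-values)."""
    accept = accept_header or ""
    if "application/json" in accept and "text/html" not in accept:
        return "json"
    return "html"  # browsers, and clients that send no preference, get HTML


class CourseHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Expect paths of the form /course/<code>; ignore any query string.
        parts = urlsplit(self.path).path.strip("/").split("/")
        if len(parts) != 2 or parts[0] != "course" or parts[1] not in COURSES:
            self.send_error(404)
            return
        course = COURSES[parts[1]]
        if choose_representation(self.headers.get("Accept")) == "json":
            body = json.dumps(course).encode("utf-8")
            content_type = "application/json"
        else:
            body = f"<h1>{html.escape(course['title'])}</h1>".encode("utf-8")
            content_type = "text/html; charset=utf-8"
        self.send_response(200)
        self.send_header("Content-Type", content_type)
        # Tell caches that the response varies with the Accept header.
        self.send_header("Vary", "Accept")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Run the sketch locally until interrupted."""
    HTTPServer(("localhost", port), CourseHandler).serve_forever()
```

After calling `serve()`, opening `http://localhost:8000/course/cs101` in a browser should return HTML, while `curl -H 'Accept: application/json' http://localhost:8000/course/cs101` should return JSON from the same URL.
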
Some important principles which, if followed, will help make a website 'friendly' towards end-users, developers and software are set out in points 4, 5 and 6 below:

4. Identify the important entities and make them addressable, using readable, reliable and hackable URLs

This is crucial - it forms the most important foundation for the Web of data. A traditional, well-designed website will be based on some sort of understood 'information architecture', however simple. Starting with the important 'entities' and making sure that they have sensible, managed and reliable identifiers is a somewhat newer approach, yet it is vital for the Web of data to function. The Web of data is, at one level, entirely about identifiers and how they link together. HEI Web teams will need to recognise the importance of being able to create ('mint') new identifiers and to manage them carefully so that they are as usable as possible. Identifiers for entities about which the HEI cares will become valuable in their own right in the Web of data. It is already understood that ownership of 'domains' in the Web address space can have value: in the world of business, Web domains frequently change hands for large sums of money. In the UK, the value of an HEI's '.ac.uk' domain is largely connected with reputation. Breaking this down:

- identify the important entities: e.g. courses, units, departments, staff, papers, rooms, learning objects, lectures etc.

- make them addressable: give them URLs. For example, if it's a course, mint a URL which points to a unique resource representing that course.

- using readable URLs: make the URL intelligible to an end user. If it's a URL pointing to a course, then a URL which has the word 'course' in it will help.

- using reliable URLs: manage the URLs you mint, and ensure that they are persistent.

- using hackable URLs: make the URLs predictable and consistent, so that a developer can work out the logical structure of the URLs and the underlying information architecture. As with 'readable URLs' above, avoid cryptic URLs wherever possible.
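
The guidelines above can be sketched as a small URL-minting helper. This is a minimal sketch under stated assumptions: the base URL, the `/<entity-type>/<slug>` pattern, the list of entity types and the internal identifiers are all hypothetical, and the registry is held in memory, whereas a real site would need persistent storage so that minted URLs are never reused or silently changed.

```python
import re
import unicodedata

BASE = "https://www.university.example"  # hypothetical base URL (reserved .example domain)

# Entity types we mint URLs for; putting the type in the path keeps URLs readable.
ENTITY_TYPES = {"course", "unit", "department", "staff", "paper", "room", "lecture"}


def slugify(label):
    """Turn a human label into a lower-case, hyphenated, URL-safe slug."""
    # Strip accents, then replace each run of non-alphanumerics with a hyphen.
    ascii_label = unicodedata.normalize("NFKD", label).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_label.lower()).strip("-")


class UrlRegistry:
    """Mints predictable /<type>/<slug> URLs and never reassigns one to another entity."""

    def __init__(self):
        self._minted = {}  # url -> internal entity id (in memory for this sketch only)

    def mint(self, entity_type, label, entity_id):
        if entity_type not in ENTITY_TYPES:
            raise ValueError(f"unknown entity type: {entity_type}")
        url = f"{BASE}/{entity_type}/{slugify(label)}"
        owner = self._minted.get(url)
        if owner is not None and owner != entity_id:
            # Reliable: an identifier, once minted, keeps pointing at the same entity.
            raise ValueError(f"{url} already identifies {owner}")
        self._minted[url] = entity_id
        return url


registry = UrlRegistry()
print(registry.mint("course", "Introduction to Programming", "C-0042"))
```

Minting the same course twice returns the same URL, while trying to give that URL to a different entity raises an error rather than quietly breaking existing links.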

5. Correlate with external identifier schemes

Don't mint your own URLs for things which have been identified elsewhere. Linking to authoritative identifiers is what will create the critical mass in the Web of data - this is diluted every time someone mints a new URL to point to something already identified with a different URL. This aspect of re-using identifiers is explored in Jon Udell's post 'The joy of webscale identifiers'.
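
A minimal sketch of 'use the existing identifier if there is one', taking papers as the example: if a paper already has a DOI, its `https://doi.org/` URL is used as the identifier, and a local URL is minted only when no external identifier exists. The base URL, the `/paper/<local_id>` pattern, the records and the DOI are all invented placeholders, not real papers.

```python
BASE = "https://www.university.example"  # hypothetical institutional base URL


def identifier_for_paper(paper):
    """Return the URL to use as a paper's identifier, preferring an existing DOI."""
    doi = paper.get("doi")
    if doi:
        # Re-use the established, authoritative identifier (DOIs resolve via doi.org).
        return f"https://doi.org/{doi}"
    # Only mint a local URL when nobody else has already identified the paper.
    return f"{BASE}/paper/{paper['local_id']}"


# Invented records: the DOI below is a made-up placeholder.
papers = [
    {"local_id": "2009-017", "title": "A journal article", "doi": "10.9999/hypothetical.0001"},
    {"local_id": "2009-018", "title": "An internal report"},
]

for paper in papers:
    print(paper["title"], "->", identifier_for_paper(paper))
```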

6. Consider individual entities, and lists of entities in Web design

URLs can identify lists of related entities, as well as individual entities, and all of the guidelines above apply equally to these list URLs. Lists of things are pretty fundamental to the Web (or to just about any information system).
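
A minimal sketch of list resources alongside item resources, assuming a hypothetical `/course/<slug>` pattern for individual courses, `/course/` for the list of all courses, and `/department/<dept>/course/` for the courses of one department. Each list links to its items and each item links back to the lists it belongs to, so a developer can navigate in either direction. The data and the `lists` field name are invented for illustration.

```python
import json

BASE = "https://www.university.example"  # hypothetical base URL

# Hypothetical course data keyed by slug.
COURSES = {
    "introduction-to-programming": {"title": "Introduction to Programming", "department": "computer-science"},
    "databases": {"title": "Databases", "department": "computer-science"},
    "medieval-history": {"title": "Medieval History", "department": "history"},
}


def course_url(slug):
    """URL of an individual course."""
    return f"{BASE}/course/{slug}"


def list_url(department=None):
    """URL of a list: all courses, or only those in one department."""
    if department is None:
        return f"{BASE}/course/"
    return f"{BASE}/department/{department}/course/"


def course_list(department=None):
    """Represent a list resource, linking to every item it contains."""
    items = [
        {"url": course_url(slug), "title": data["title"]}
        for slug, data in sorted(COURSES.items())
        if department is None or data["department"] == department
    ]
    return {"url": list_url(department), "items": items}


def course_item(slug):
    """Represent one course, linking back to the lists it appears in."""
    data = COURSES[slug]
    return {
        "url": course_url(slug),
        "title": data["title"],
        "lists": [list_url(), list_url(data["department"])],
    }


print(json.dumps(course_list("computer-science"), indent=2))
```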

Conclusion

If I wanted to abbreviate this even more into three brief instructions, they would be:

- think in terms of information entities, identifiers and relationships, as well as pages

- integrate into the wider Web by re-using existing identifiers and by linking to other information

- realise that developers are a potentially important stakeholder in any modern website