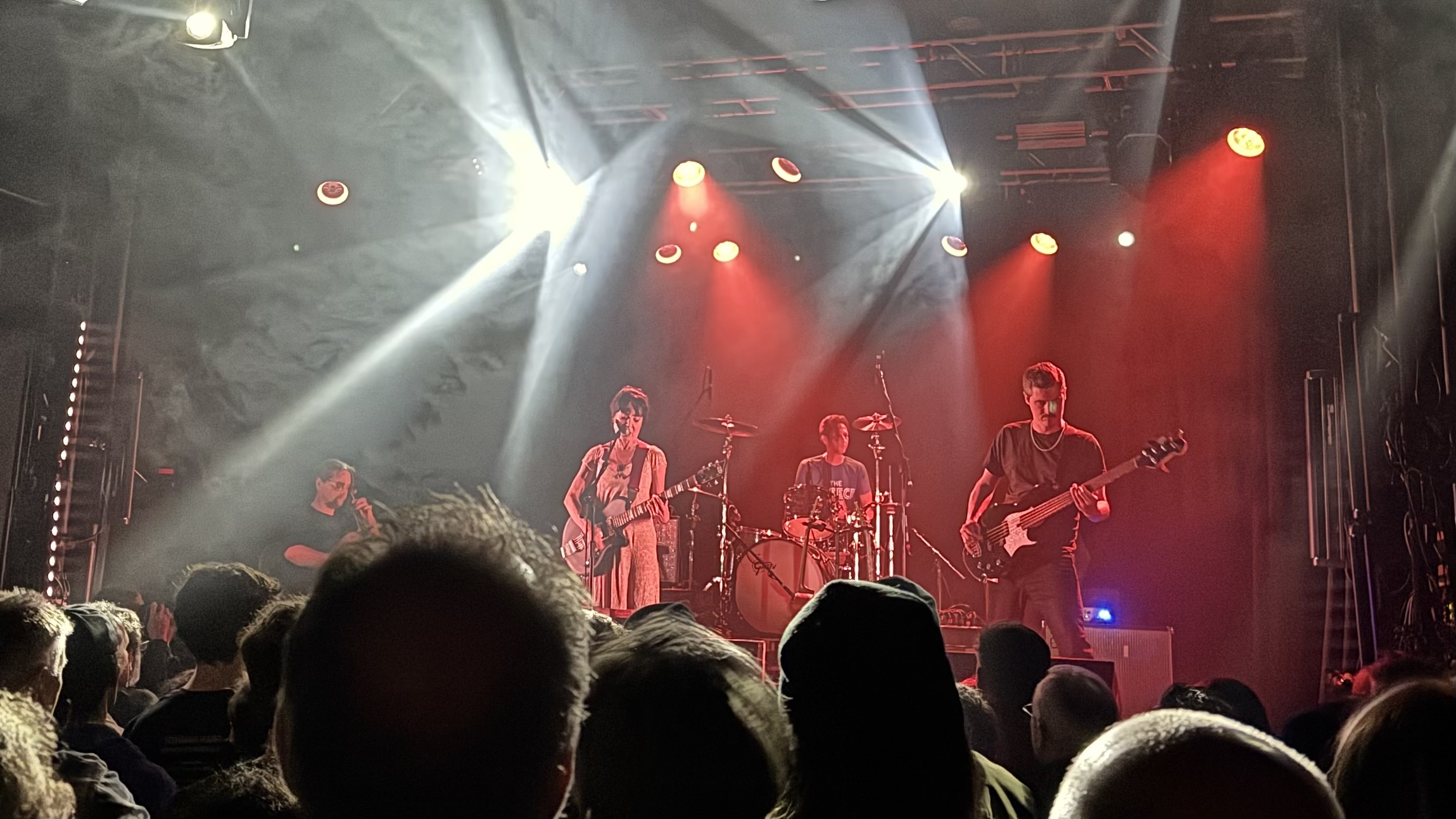

Throwing Muses, Electric Ballroom, Camden

I went to see Throwing Muses play the Electric Ballroom in Camden last night.

This is the third time I have seen them play (I have also seen Kristin Hersh play solo gigs a couple of times). So I guess you could say I'm a fan.

I first saw Throwing Muses at the Portsmouth Polytechnic in 1989. I'm startled to realise that was thirty-six years ago. Fast forward to last night: I assumed the crowd would be ageing indie-kids of my generation, and I was mostly right. But standing next to me, as we waited for the band to come on, were a couple of much younger lads. I told them I had been roughly their age when I first saw the band. It turned out 1989 was a dozen years before they were even born! They had been introduced to Throwing Muses by an uncle, so I guess the band is still finding new audiences after all this time.

It was great to see the band again, even if three-quarters of its members have changed (the lineup has always been quite fluid). The heart of Throwing Muses is, and has always been, Kristin Hersh. She is a mesmerising performer - perhaps because she doesn't overtly perform so much as just share her weird, intricate, fascinating songs with us. She is also a great and original guitarist. Her son, Dylan, played bass (he's really good!) and I guess he too wasn't even born in 1989...

I didn't plan to go into this gig thinking about the passing of time, but those were the thoughts that somehow crept up on me. The songs of Throwing Muses and Kristin Hersh have been a constant for me since my mid-teens. It was weird to hear Soap and Water - introduced by Kristin last night as "an old song, and kind of a stupid one!". I can vividly remember hearing that for the first time, in the bedroom of a friend, who had just bought an early EP called The Fat Skier. That EP grabbed my attention like few records have ever done - I think I knew straightaway that I had discovered something special.

Anyway - a great gig, covering decades of songs from a prolific songwriter. Many just sounded great on the night, especially the higher energy songs - Sunray Venus near the start, Slippershell, and Bright Yellow Gun as the perfect final encore. Other songs had a more personal impact - I actually had a little shiver when I recognised the opening of Colder, a song I used to play over and over the summer I bought the vinyl of House Tornado. And similarly - hearing Bea played live and remembering that one from the 1989 gig.

It makes me happy to realise that there are some young folk discovering this music for the first time and, like me nearly forty years ago, realising that it's something special.

Here's the crowd-sourced set-list in case you're interested.